Artificial Intelligence in Healthcare Education and Professional Development (AI learning perspective 1)

Where Do We Stand and Where Do We Go

Written By: Unmila P Jhuti, PhD

Edited By: Imtiaz Ibne Alam, RPh

Artificial Intelligence (AI) is transforming the way we learn, teach, and assess educational outcomes. From programmers and language learners to teachers, medical writers, and healthcare professionals, AI is becoming everyone’s favorite tool for learning. Despite the hype around AI innovations, many wonder whether it will turn out to be another bubble like the dot-com bubble that burst in the early 2000s. Recent developments in the field raise a more serious question: Do AI tools really hold significant promise, and if so, can they deliver on that promise?

Research/Evidence

A recent review, “Towards an AI-Literate Future,” explores how AI literacy is being discussed, taught, and integrated in educational research, which is also relevant for medical writers. It emphasizes that AI literacy is no longer optional but essential. Anyone interested in learning new things needs to embrace AI tools, understand how to use them, recognize the biases associated with them, and consider their ethical and social implications. Research shows that integrating AI into curricula can help learners improve critical thinking, interdisciplinary problem-solving, and real-world application.

A quantitatively complemented mixed-meta-method study finds that AI applications are being used in primary education as interactive learning media, with positive impacts on engagement and achievement. This suggests that AI can become a helpful tool for teaching children from a very young age. And AI has something for everyone. For example, for new medical writers, AI-assisted self-directed learning (SDL) is attracting a lot of attention. A comprehensive systematic review shows that AI tools are enhancing SDL by offering helpful feedback and personalized pacing, especially in online environments.

Beyond general education, AI is also changing how healthcare professionals and regulatory specialists are trained. Evidence shows both the promise and the challenges of AI in these fields. AI systems are being used to provide personalized tutoring for medical students. For example, natural language processing (NLP)-based tutors can deliver case-based scenarios, simulate patient interactions, and adjust difficulty according to learners’ responses. A 2025 systematic review in BMC Medical Education found that AI-based teaching improved knowledge scores and diagnostic reasoning, though effects varied depending on the tool and instructional design.

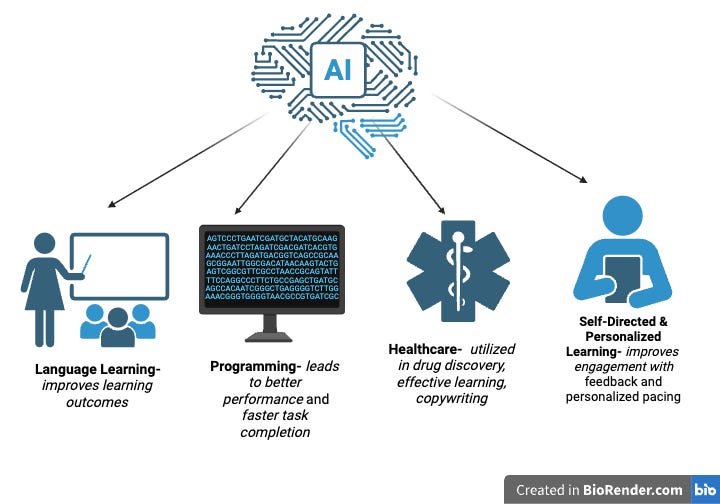

Beyond medical learning, generative AI models are also being used for regulatory drafting, for checking compliance with regulatory templates, and for aligning documents with ICH/GCP guidelines. AI acts as a central hub for learning, extending across language acquisition, programming, healthcare education, and self-directed learning (Figure 1).

Figure 1: AI as a Catalyst for Learning Across Domains

What are the most common challenges with AI-based learning?

With such broad implications comes the burden of staying factual about the subject matter. The biggest challenge with AI-directed learning is ensuring that the assisting AI does not get in the way of true assimilation of the subject matter. The evidence on this point is mixed. For example, high school students using a basic GPT-4-powered tutor did well while they had access, but when access was removed, they scored significantly lower than peers who never used AI. Conversely, a class of undergraduates who used AI tools to practice their class debates performed better than the group that practiced without AI. Taken together, these findings suggest that using AI as an assistant rather than a guide may be the way to go.

The second biggest challenge with generative AI is that it sometimes produces inaccurate or misleading output, also known as “hallucinations.” It also often struggles with technical subjects. This can be a problem for a medical writer who is trying to draft an article with the help of AI. To tackle such problems, we need tools tailored to specific fields, along with standard practices and policies. Training programs must teach new learners to validate and fact-check all AI outputs so that hallucinations and misinterpretations are caught.

We also need to ask about the long-term behavioral impact of AI on metacognitive processes: how AI affects motivation, critical thinking, and self-regulation. For example, are new learners less likely to engage deeply if AI tools give quick answers?

Are there any laws, and if so, how will they shape AI learning in the domain?

AI is still the new kid on the block when it comes to regulation, so the laws concerning it keep changing. Recently, the FDA released premarket guidance for AI-enabled software. This has broad implications for professionals like physicians, teachers, medical writers, and engineers. Software that supports research or diagnosis, or that simulates programming decisions, will now require case-specific evidence and documentation plans to ensure authenticity.

Data-protection rules and journal policies are likely to become stricter about consent so that no unconsented personal health information slips through. Teaching will shift toward verifying AI-generated claims, tracing sources, and documenting where generative text came from. Training platforms will integrate compliance checks (e.g., checking citations) as part of feedback to reduce misuse. Medical writers must understand how to use AI responsibly in drafting documents that can affect data interpretation and regulatory filings.

Code generators used in classroom settings will need to track the provenance of training data and ensure that no secret keys or proprietary code leak. As code-generation tools become common, programs will emphasize testing, code review, and reproducibility so that students can audit AI-suggested code. Such laws are expected to apply not only to professionals but also to governing bodies (see the TRAIGA law below).

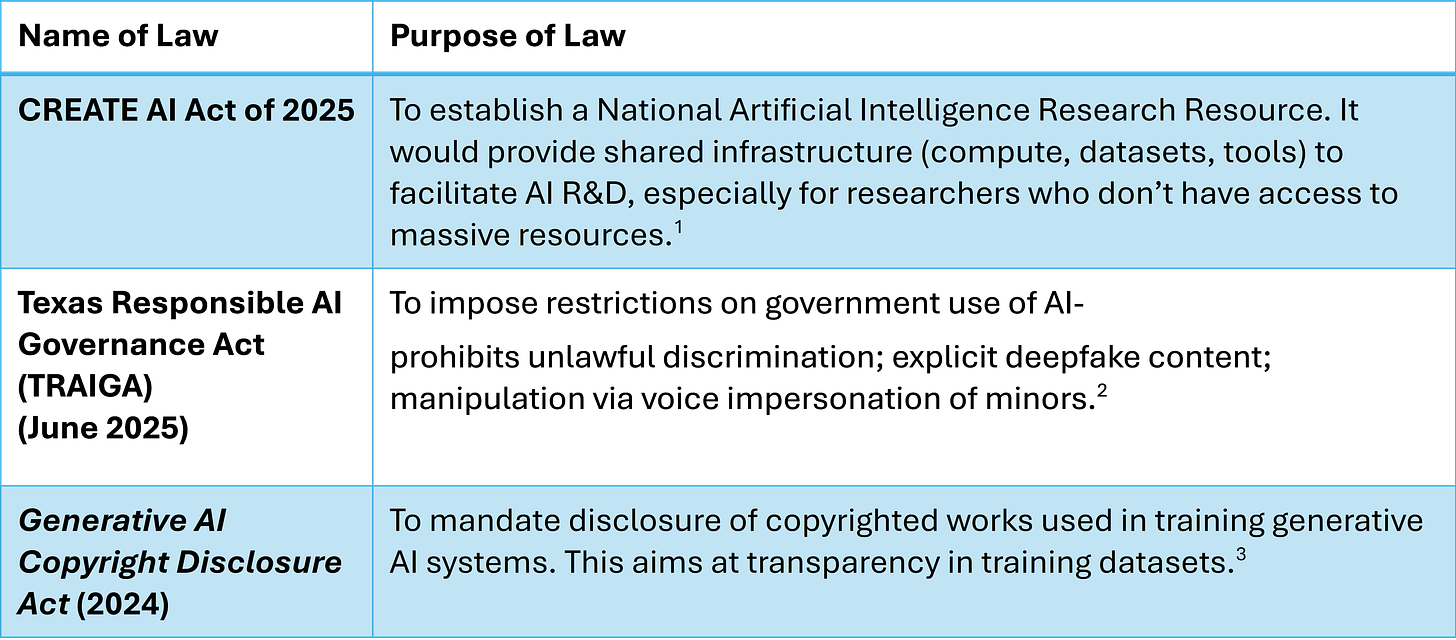

In summary, these new laws will shift the mode of teaching from “how to write yourself” to “how to evaluate, test, and document AI-generated information” (Table 1).

Table 1: Recent Laws that May Affect Learning Methods

Personal Perspective

What do the strict AI regulations mean for both seasoned and newbie writers?

I believe learning itself is going to get easier, but the overall planning, learning methods, and editing are going to face stricter regulation as additional laws governing AI-based writing are introduced. More step-by-step record keeping will be required. That means everything from structure planning to editing will need to be documented. Learning methods will shift toward understanding how to use AI and how to evaluate AI responses using evidence-based research.

Editing will be rigorous and detailed, focusing more on the writer’s ability to assess AI responses using their subject-matter expertise and critical thinking. However, the use of AI in high-risk medical writing fields, such as healthcare training, patient-facing CME, and regulatory submissions, may still require human review and multiple fact-checking phases until we find a solution to the “hallucination” issue in these tools. Finally, the learning process across all professional domains will place greater emphasis on AI ethics, mandates, and best practices.

What does the future hold for the overall learning experience?

In an ideal future, I believe AI tutors will provide personalized learning focused on the user’s strengths and weaknesses. It will be like having a personal mentor available 24/7 who spurs critical thinking and creativity in their mentees. In healthcare and medical writing, this means learning at your own pace while developing strong fact-checking skills; in programming, it means debugging assistants that can fix failing code.

Next-gen AI systems won’t just handle text; they’ll process speech, images, video, and even complex datasets. It is high time we embraced the fact that AI is going to change the course of learning, and hopefully, together with human intervention, it can reshape education into a journey of curiosity and growth.

Key Takeaways

AI is transforming learning for professionals across multiple fields.

Integration of AI can boost critical thinking, problem-solving, and real-world application.

AI works best as an assistant; learners must verify outputs and avoid overreliance.

Generative AI can produce inaccurate content, requiring domain-specific tools and fact-checking.

New laws and regulations shift teaching toward evaluating, testing, and documenting AI-generated work.

Future AI tutors will personalize learning, support creativity, and handle complex multimedia scenarios.

Other Voices on This Topic

Consider reading these additional articles for unique perspectives and more advice.

How AI Tools Affect Learning and Cognitive Skills in New Medical Writers (AI learning perspective 2)

Preserving critical thinking and cognitive skills while using AI

References

Biagini G. Towards an AI-literate future: a systematic literature review exploring education, ethics, and applications. Int J Artif Intell Educ. 2025:1–51.

Feigerlova E, Hani H, Hothersall-Davies E. A systematic review of the impact of artificial intelligence on educational outcomes in health professions education. BMC Med Educ. 2025;25(1):129.

Jaramillo JJ, Chiappe A. The AI-driven classroom: a review of 21st century curriculum trends. Prospects. 2024;54(3):645–660.

Library of Congress. H.R. 2385, 119th Cong. (2025). March 26, 2025.

Slade JJ. AI in education: enhancing learning or eroding critical thinking? Oregon State University Teaching Blog. August 12, 2025.

Topkaya Y, Doğan Y, Batdı V, Aydın S. Artificial intelligence applications in primary education: a quantitatively complemented mixed-meta-method study. Sustainability. 2025;17(7):3015.

U.S. Food and Drug Administration. Artificial intelligence and software as a medical device (SaMD). Accessed March 25, 2025.

White & Case LLP. AI Watch: global regulatory tracker – United States. September 24, 2025.

Younas M, El-Dakhs DAS, Jiang Y. A comprehensive systematic review of AI-driven approaches to self-directed learning. IEEE Access. 2025.

AI Disclaimer

ChatGPT was used to search for and draft the section on recent laws and regulations related to AI-based learning. Grammarly’s AI tool was used to edit the blog post.

Contributions of Blog Creator

Nicole Bowens, PhD developed the topic for this article. She created this blog to bring together perspectives from medical writers at all experience levels, with the goal of supporting those who are aspiring or early in their careers.

If you are interested in contributing to the blog as a writer or editor, fill out the Google form application, and you will receive a follow-up email with further instructions.

About the Contributing Author

This article was written by Unmila P Jhuti, PhD, a trained research scientist, AI analyst, and medical writer. With expertise in neurodegenerative diseases, oncology, and metabolic disorders, Dr. Jhuti is also an accomplished science communicator who specializes in translating complex research into accessible, engaging, and evidence-based content for a broad audience.

Contributor Contact Info

Writer: Unmila P Jhuti, PhD

📧 Email: unmila267@gmail.com

🔗 LinkedIn: www.linkedin.com/in/unmila-p-jhuti-ph-d-677a29149

Editor: Imtiaz Ibne Alam, RPh

🔗 LinkedIn: linkedin.com/in/imtiazibnealam
